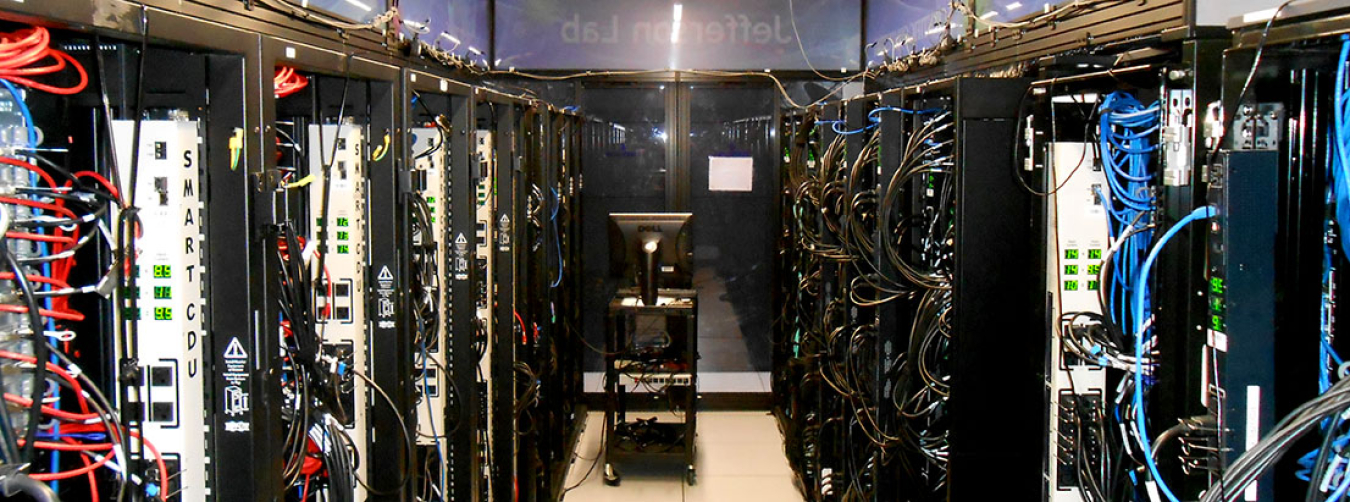

Before this project, the two computing systems at the Thomas Jefferson National Accelerator Facility (Jefferson Lab), the High Performance and Core Computing Systems, were housed in completely different areas of the same building and relied on different cooling and power systems. Neither area could support expansion because of its layout and the limits of its cooling and power systems. Rather than continue operating this way, Jefferson Lab took on an approximately $8.3 million construction project intended to optimize space, make the data center more efficient, and decrease the cost of running the computing systems.

With this project, the two computing systems were eventually housed in the same area, resulting in a calculated annual energy savings of $37,594 and reducing mechanical energy consumption by 50%. The power usage effectiveness (PUE) was also reduced to 1.27 from more than 2.

The Thomas Jefferson National Accelerator Facility improved the data center in many ways through this construction project, including introducing sealed hot aisles to prevent the mixing of hot rack exhaust air and cool supply air. The now-optimized supply-and-return airflow system minimizes both the mixing of cool supply air with hot return air and the recirculation of hot return air. This allows the data center to run at a warmer temperature and reduces the energy wasted on overcooling. Additionally, the cooling, heating, humidification, and dehumidification systems are now the most efficient systems available in the area. Direct expansion cooling units used for redundant cooling have an innovative “Econophase” function that pumps refrigerant through the system to reject heat without using compressors.

More efficient uninterruptible power systems and power distribution units were also installed to reduce power consumption. Temperature sensors, electrical meters, and flow meters were installed to monitor equipment data more effectively and to calculate the data center’s PUE in real time.
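PUE is the ratio of the total power drawn by the facility to the power delivered to the IT equipment, so it can be computed directly from a pair of electrical meter readings. The snippet below is a minimal sketch of that calculation, not Jefferson Lab's monitoring code: the `MeterSample` type, the function names, and the kilowatt values are all hypothetical and were chosen only to reproduce the reported PUE of 1.27.

```python
from dataclasses import dataclass


@dataclass
class MeterSample:
    """One simultaneous reading from hypothetical facility and IT meters."""
    total_facility_kw: float  # everything the data center draws: IT, cooling, power losses
    it_equipment_kw: float    # power delivered to the computing equipment only


def pue(sample: MeterSample) -> float:
    """Return PUE = total facility power / IT equipment power."""
    if sample.it_equipment_kw <= 0:
        raise ValueError("IT equipment power must be positive")
    return sample.total_facility_kw / sample.it_equipment_kw


def overhead_fraction(pue_value: float) -> float:
    """Non-IT power (cooling, distribution losses) per unit of IT power."""
    return pue_value - 1.0


# Illustrative numbers only, not measured data from the facility.
sample = MeterSample(total_facility_kw=1270.0, it_equipment_kw=1000.0)
print(round(pue(sample), 2))
```

Read this way, a PUE above 2 means that cooling and power distribution consumed more power than the computing equipment itself, while a PUE of 1.27 means that overhead is about 0.27 units for every unit of IT power.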

Because construction took place while the data center remained in operation, close and explicit coordination between staff and the construction crews was needed to keep the data center functioning continuously. This was a challenge for both parties, but they overcame it by coordinating data center requirements through an interdisciplinary organizational team. The team focused on maximizing project efficiency while minimizing operational interference.

The following lessons were learned during this project.

- Almost any typical data center can put in place the features that Jefferson Lab used to reduce energy consumption. The ambient conditions at the data center, located in Newport News, Virginia, are hot and humid in the summer and cold and dry in the winter, and temperature swings are very common. If the data center here can implement effective temperature control features for energy efficiency and energy savings, then any data center can do the same. The Federal Energy Management Program's Best Practices Guide for Energy Efficient Data Center Design is an excellent starting point when planning renovations or construction.

- Optimizing airflow is key when designing an energy-efficient, reliable data center. Engineers and computing professionals must collaborate to create a design that effectively concentrates heat loads, optimizing computing capabilities while minimizing energy consumption. Open and detailed communication is needed during this process.

Currently, no other data centers are planning to replicate this project, but the Thomas Jefferson National Accelerator Facility recommends that anyone attempting a similar project engage a strong project manager, innovators, and experienced users to provide creative input.