Program evaluations should be planned strategically, rather than on an ad hoc basis. Strategic evaluation planning means that the evaluation activity first considers what evidence is needed to inform decision making, and what questions need to be answered to help the organization obtain the evidence to improve the way it does business.

Focusing on evidence needed, questions to be answered, and decisions to be made is an important first step toward strategically planning evaluations.

One way to have useful and cost-effective evaluations is to strategically plan evaluation activity so it supports management activity cycles for planning, budgeting, analysis, program implementation, and benefits reporting and communication.

In this way, evaluations generate information to feed into critical decision processes and continuous improvement. Planning evaluations strategically allows the program to phase in a full range of evaluation activities over a period of years, and to spread out the cost of evaluation over multiple years.

A Strategic Evaluation Plan (also referred to as a Learning Agenda or Evidence-Building Plan) is seen as a living document that can be modified as the program evolves and changes over time.

The term "evidence" is defined as "information produced as a result of statistical activities conducted for a statistical purpose" (the meaning given that term in section 3561 of Title 44 of the United States Code). The broader view, taken by Federal agencies, is that evidence goes beyond statistical information alone. In addition to statistical analysis results, it also includes:

- Program evaluation information

- Performance monitoring and analysis findings

- Foundational fact-finding information (e.g., indicators, descriptive statistics).

Taking a strategic view of evaluation means that you need to do the following:

- First, consider for your office or program what information is needed to provide evidence to support decision making (see Informed Decisions), and

- Develop an overall strategy and lay out a plan for collecting and analyzing data that will generate the information needed.

First, Consider Your Program's Decision Information Needs and Questions

Since the idea is to have all evaluative information working together, you might start by taking stock of your decision needs and questions that need to be addressed to obtain information necessary to support those decisions.

The illustrative table below is one way to begin taking stock of these considerations. Complete the second and third columns, then decide which program areas require management attention and need to be evaluated (the Targeted Program Areas columns).

For your program, fill in the cells (with details on known decision points, timing needs, expected key audiences, type of evaluation, etc.) for the program areas for which you will need evaluation information to inform critical decisions.

| Management Activity Cycles | Decisions to Be Informed (Examples) | Questions Asked | Targeted Program Areas? (Program Area 1) | Targeted Program Areas? (Program Area 2) | Targeted Program Areas? (Program Area 3) |
|---|---|---|---|---|---|
| Planning | | | | | |
| Budget | | | | | |
| Analysis | | | | | |
| Implementing | | | | | |
| Benefits reporting and other communication | | | | | |

Second, Develop an Overall Evaluation Strategy that Includes Use of Results

Ideally, a CMEI office should have an overall evaluation strategy and supporting multi-year evaluation plan that addresses each critical program area, including a schedule for the planned evaluation activities and the resources set aside for them.

A mix of evaluation types may be necessary. For instance, the decision information needs for R&D programs might require several different types of evaluation to be performed over a multi-year period, going beyond peer reviews alone.

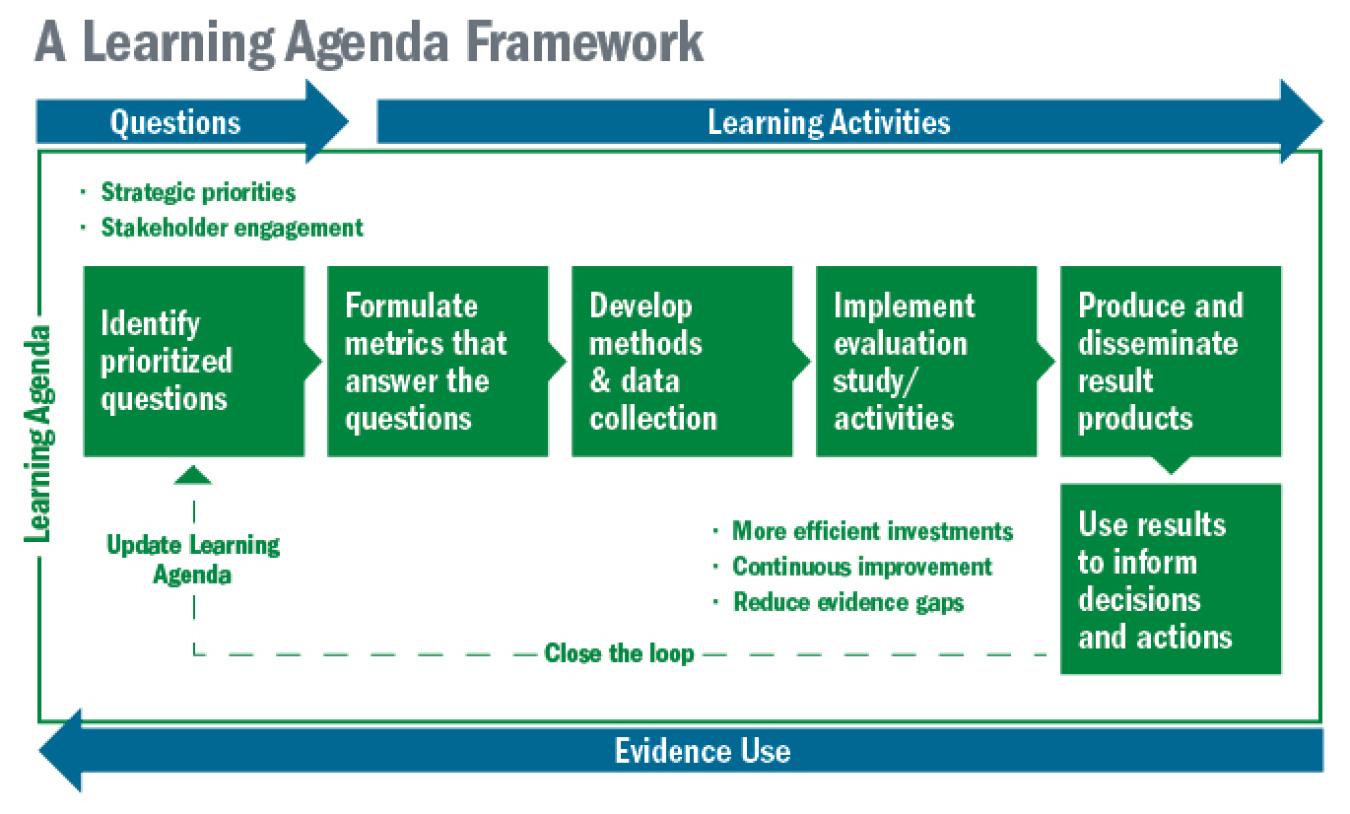

A Learning Agenda approach (illustrated in the steps below) could guide the effort to develop a strategically prepared evaluation plan.

(1) Formulate Prioritized Questions

After you have clarified the decisions to be informed and generally understand the evidence needed to inform them, establish your program's prioritized questions that, when answered, will help inform those decisions.

Developing program logic models can be especially useful here. These help capture goals in a concise way and show how these goals will be achieved, thus highlighting key evaluation questions, as well as performance metrics.

The questions developed can be prioritized based on the organization's or office's strategic goals and priorities. In this way, the questions will be aligned with the organizational mission and operational priorities. Because evaluation funding may be limited, you might have to focus on the top-priority questions.
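The prioritization described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the 1-to-5 scoring scales, the field names, the sample questions, the cost figures, and the simple "add the two scores" ranking rule are all hypothetical and are not part of any prescribed method. The sketch ranks questions by their combined score and then funds them in rank order until the evaluation budget runs out.

```python
from dataclasses import dataclass


@dataclass
class EvaluationQuestion:
    text: str
    strategic_alignment: int  # hypothetical scale: 1 (low) to 5 (high)
    decision_urgency: int     # hypothetical scale: 1 (low) to 5 (high)
    estimated_cost: float     # hypothetical cost of answering the question, in dollars


def prioritize(questions, budget):
    """Rank questions by combined score, then fund in rank order within budget."""
    ranked = sorted(
        questions,
        key=lambda q: q.strategic_alignment + q.decision_urgency,
        reverse=True,
    )
    funded = []
    remaining = budget
    for question in ranked:
        # Skip any question that no longer fits in the remaining budget.
        if question.estimated_cost <= remaining:
            funded.append(question)
            remaining -= question.estimated_cost
    return ranked, funded


# Hypothetical questions and budget, for illustration only.
questions = [
    EvaluationQuestion("Is the program reaching its target audience?", 2, 3, 80_000),
    EvaluationQuestion("Does the funding improve workforce entry?", 5, 5, 100_000),
    EvaluationQuestion("Are program processes efficient?", 4, 4, 50_000),
]
ranked, funded = prioritize(questions, budget=160_000)
for question in funded:
    print(question.text)
```

A real prioritization would usually involve judgment and stakeholder input rather than a single score; the point of the sketch is only that limited funding forces a cut-off somewhere down the ranked list.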

(2) Define and Execute Evaluation Activities

Try to identify the information/evidence gaps. Formulate a set of prioritized questions that must be answered to provide the evidence to inform decisions.

- Concentrate evaluation activities on areas in your program strategy where there are gaps in information/evidence needed to inform decisions.

- With metrics identified from the program logic and the questions being asked, collect data and develop the quantitative or qualitative methods necessary to answer the questions. Examples of the kinds of data and methods that could be used (depending on evaluation needs) are:

  - Data:

    - Surveys of probability samples of populations

    - Measurement and verification (M&V)

    - Semi-structured interviews

    - Expert elicitation

    - Database mining

    - Use of administrative data

  - Methods:

    - Evaluation research designs (e.g., randomized controlled trials, quasi-experimental designs, counterfactual analysis techniques)

    - Statistical analysis

    - Text analytics

    - Regression discontinuity analysis

    - Cluster analysis

    - Discounted cash flow valuation method to estimate the economic value of funding

    - Mixed-method analysis techniques

    - Case study approach

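As one concrete instance of the methods listed above, the discounted cash flow approach reduces to a net present value calculation: each year's net cash flow is divided by (1 + discount rate) raised to the number of years from today, and the results are summed. The sketch below is a minimal illustration; the function name, the cash flows, and the 10% discount rate are assumptions chosen for the example, not figures from any program.

```python
def net_present_value(cash_flows, discount_rate):
    """Sum of discounted cash flows.

    cash_flows[t] is the net cash flow at the end of year t,
    where t = 0 is today (so it is not discounted).
    """
    return sum(
        cash_flow / (1 + discount_rate) ** year
        for year, cash_flow in enumerate(cash_flows)
    )


# Hypothetical example: 1,000 of funding today, followed by
# benefits of 600 at the end of each of the next two years.
flows = [-1000.0, 600.0, 600.0]
npv = net_present_value(flows, discount_rate=0.10)
print(round(npv, 2))
```

A positive result suggests that, under the assumed discount rate, the discounted benefits exceed the funding outlay. The choice of discount rate and the estimation of the benefit stream are where most of the real analytical effort lies.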

(3) Produce and Disseminate the Results of the Evaluation Activity(ies)

Disseminating results or findings from an evaluation activity could include publishing reports and making them publicly available, giving briefings to key stakeholders, preparing infographics, and so on. Whatever form the communication takes, it must be tailored to its stakeholders, at a level of detail suitable to them, so that it is actually read and used.

(4) Use the Results to Fill Evidence Gaps and Inform Decision Making

Results/findings should then be used to help inform decisions, such as:

- Decisions to make program improvements (e.g., modifications, redesign)

- Decisions to revise program goals and strategy

- Resource allocation decisions

- Decisions to communicate program strategy and value

- Operational decisions

Here is a simplified example of the Learning Agenda process, as it applies to an illustrative question:

| Learning Agenda step | Illustrative example |
|---|---|
| Question asked | Does CMEI R&D STEM education workforce development funding help achieve the goal of positively impacting students' entry into the energy workforce? |
| Evaluation activity: metrics | Knowledge gain, job preparedness, careers pursued, jobs, stay in field, transition to field |
| Evaluation activity: method and data | A specialized impact and process evaluation study, using quasi-experimental evaluation design (comparison groups) and statistical sample data and analysis |
| Produce and disseminate | Publish study report and post on CMEI website, prepare 2-page executive brief for CMEI leadership and others |
| Use results | Program manager uses results to improve the program and to communicate its value to stakeholders |
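To make the comparison-group idea in the example above more concrete, here is a minimal difference-in-differences sketch, which is one common quasi-experimental technique. It is an assumption that such a study would use this particular estimator; the variable names and all scores below are invented purely for illustration and do not come from any actual evaluation. The estimate is the change observed in the participant group minus the change observed in the comparison group over the same period.

```python
from statistics import mean

# Hypothetical knowledge-test scores (illustrative values only).
participants_before = [50.0, 55.0, 60.0]
participants_after = [70.0, 75.0, 80.0]
comparison_before = [52.0, 54.0, 59.0]
comparison_after = [57.0, 60.0, 63.0]

# Average change within each group.
participant_change = mean(participants_after) - mean(participants_before)
comparison_change = mean(comparison_after) - mean(comparison_before)

# Difference-in-differences: the participant change net of the
# change that the comparison group experienced anyway.
estimated_effect = participant_change - comparison_change
print(estimated_effect)
```

The comparison group stands in for what would have happened to participants without the program, so the estimate is only as credible as the similarity between the two groups. A real study would also need an adequate sample and a test of statistical significance.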

Tips for Successful Strategically Planned Evaluations

- Plan for high-quality, cost-effective evaluations.

- Evaluation studies often take six months or more to complete, so a schedule helps ensure that all program elements are assessed on a time scale that delivers information in time for decision points.

- Avoid the trap of unnecessarily limiting or constraining the scope of an evaluation study to meet a compressed time schedule. For example, in some cases, results from an evaluation may have to become available every other budget cycle if an in-depth scope of investigation is called for.

- Put in place standard procedures for gathering and validating as much data as possible as a routine part of program record-keeping.

- Combine efforts where you can. For example, do customer satisfaction surveys for all program customers at one time.

- Set aside budget resources for evaluation, including an amount for studies that cannot be predicted. In many Federal government agencies, evaluation activity typically comprises 1% to 10% of a program's budget.

- Establish a procedure to ensure the independence of the evaluation process.

- Have a quality assurance process in place that calls for external review of the study evaluation plan and the draft report and ensures data quality and consistency (especially when evaluation data comes from multiple sources).
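The budgeting tip above can be turned into quick arithmetic. The sketch below computes the evaluation set-aside range implied by the 1% to 10% figure cited in the tips, and then spreads a chosen amount evenly across several years. The function names, the program budget, and the even multi-year split are hypothetical choices for illustration; actual set-asides depend on agency practice and program needs.

```python
def evaluation_set_aside_range(program_budget, low_share=0.01, high_share=0.10):
    """Return the (low, high) evaluation budget implied by the given shares."""
    return program_budget * low_share, program_budget * high_share


def spread_over_years(total_evaluation_budget, years):
    """Split an evaluation budget evenly across a multi-year plan."""
    return [total_evaluation_budget / years] * years


# Hypothetical program budget of 5 million dollars.
low, high = evaluation_set_aside_range(5_000_000)
print(f"Set-aside range: {low:,.0f} to {high:,.0f}")

# Hypothetical choice: plan 300,000 of evaluation work over three years.
annual_amounts = spread_over_years(300_000, years=3)
print(annual_amounts)
```

An even split is the simplest case; a phased plan might instead weight later years more heavily as in-depth impact studies come due.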